Contact Map Transfer with Conditional Diffusion Model for Generalizable Dexterous Grasp Generation

NeurIPS 2025

Abstract

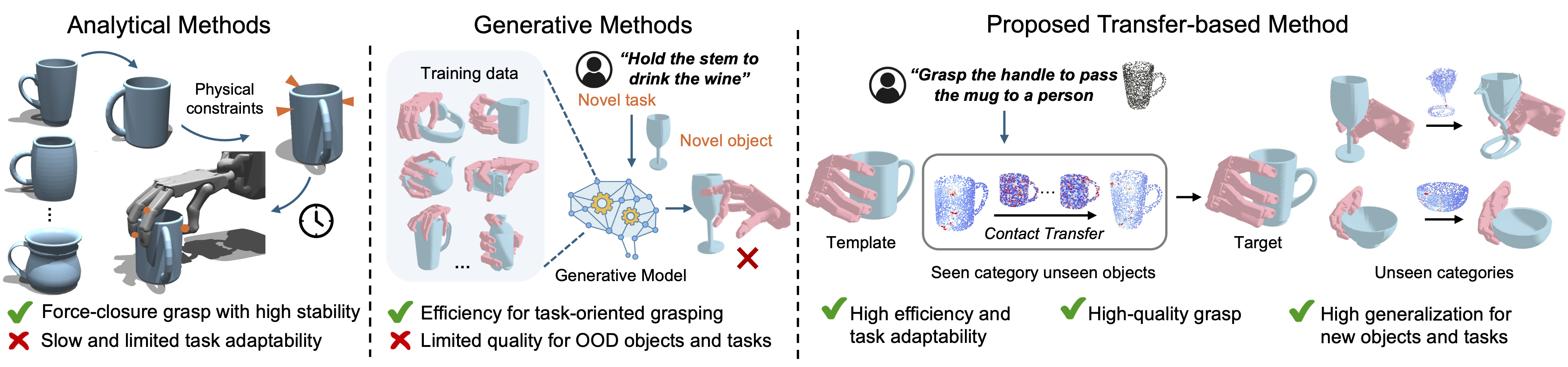

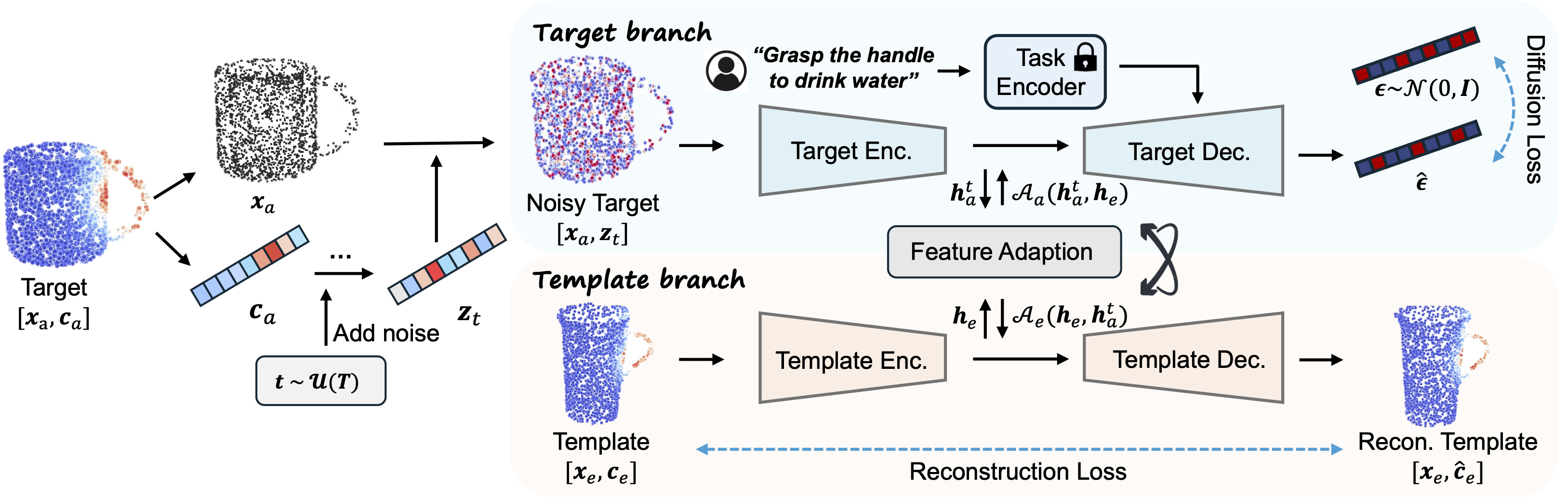

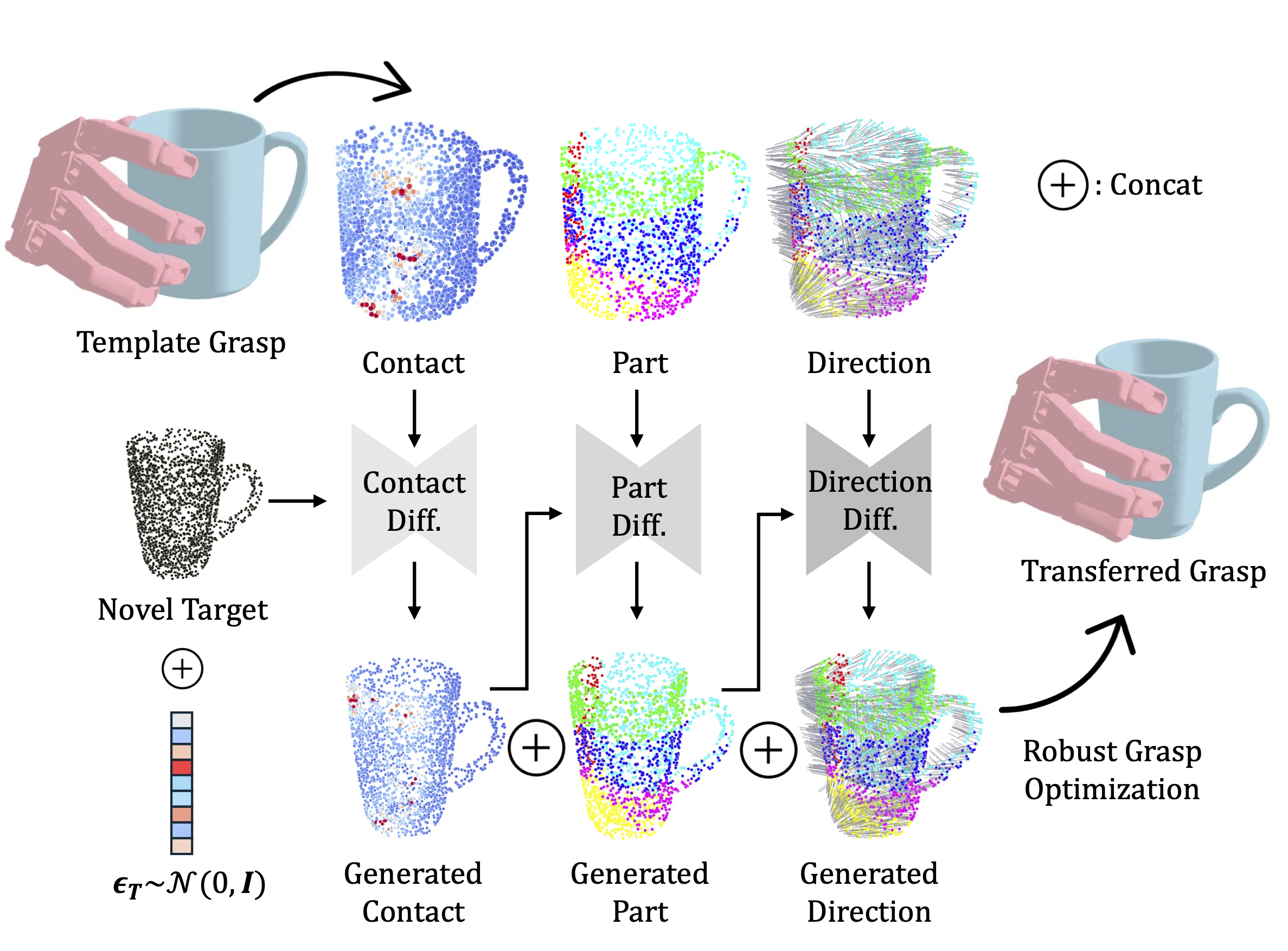

Dexterous grasp generation is a fundamental challenge in robotics, requiring both grasp stability and adaptability across diverse objects and tasks. Analytical methods ensure stable grasps but are inefficient and lack task adaptability, while generative approaches improve efficiency and task integration but generalize poorly to unseen objects and tasks due to data limitations. In this paper, we propose a transfer-based framework for dexterous grasp generation, leveraging a conditional diffusion model to transfer high-quality grasps from shape templates to novel objects within the same category. Specifically, we reformulate the grasp transfer problem as the generation of an object contact map, incorporating object shape similarity and task specifications into the diffusion process. To handle complex shape variations, we introduce a dual mapping mechanism that captures the intricate geometric relationships between shape templates and novel objects. Beyond the contact map, we derive two additional object-centric maps, the part map and the direction map, to encode finer contact details for more stable grasps. We then develop a cascaded conditional diffusion model framework to jointly transfer these three maps while keeping them consistent with one another. Finally, we introduce a robust grasp recovery mechanism that identifies reliable contact points and optimizes grasp configurations efficiently. Extensive experiments demonstrate the superiority of our proposed method. Our approach effectively balances grasp quality, generation efficiency, and generalization performance across various tasks.

Methodology

Conditional Diffusion Model for Contact Map Transfer
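
As a concrete picture of the quantity being transferred, the following is a minimal sketch of an object contact map: one value per object surface point that is close to 1 where the hand touches the object and decays toward 0 with distance. The exponential mapping, the `tau` length scale, and the function name `contact_map` are illustrative assumptions, not the formulation used in the paper.

```python
import numpy as np

def contact_map(object_points, hand_points, tau=0.01):
    """Per-point contact value in (0, 1] for an object point cloud.

    Assumption: contact is modeled as exp(-d / tau), where d is the distance
    from each object point to its nearest hand surface point. The paper may
    define the map differently.
    """
    object_points = np.asarray(object_points, dtype=float)  # (N, 3)
    hand_points = np.asarray(hand_points, dtype=float)      # (M, 3)
    # Pairwise distances between every object point and every hand point.
    diff = object_points[:, None, :] - hand_points[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)                    # (N, M)
    nearest = dist.min(axis=1)                              # (N,)
    return np.exp(-nearest / tau)

# Toy example: one object point coincides with the hand, one is 1 m away.
obj = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
hand = np.array([[0.0, 0.0, 0.0]])
cmap = contact_map(obj, hand)
```

Under this assumed definition, the map lives on the object rather than on the hand, so it can serve as the target that a conditional diffusion model generates for a novel object given a template.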

Cascaded Conditional Diffusion for Joint Contact, Part, and Direction Transfer
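
The cascade can be sketched as three conditional samplers run in sequence, each conditioned on the maps produced before it. The sketch below uses standard DDPM ancestral sampling with a linear noise schedule. The stage order (contact, then part, then direction), the continuous one-channel part map, the conditioning by feature concatenation, and every name here (`make_schedule`, `ddpm_sample`, `cascaded_transfer`, `zero_eps`) are assumptions for illustration; the placeholder noise predictor stands in for trained networks that are not described on this page.

```python
import numpy as np

def make_schedule(num_steps=50, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumed choice) with cumulative alpha products."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

def ddpm_sample(eps_model, cond, shape, schedule, rng):
    """DDPM ancestral sampling; eps_model(x, t, cond) predicts the added noise."""
    betas, alphas, alpha_bars = schedule
    x = rng.standard_normal(shape)
    for t in range(len(betas) - 1, -1, -1):
        eps = eps_model(x, t, cond)
        # Posterior mean of x_{t-1} given x_t and the predicted noise.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        # No noise is added at the final step (t == 0).
        noise = rng.standard_normal(shape) if t > 0 else np.zeros(shape)
        x = mean + np.sqrt(betas[t]) * noise
    return x

def cascaded_transfer(models, template_feat, rng, schedule=None):
    """Sample contact, part, and direction maps in sequence (assumed order)."""
    if schedule is None:
        schedule = make_schedule()
    n = template_feat.shape[0]
    contact = ddpm_sample(models["contact"], template_feat, (n, 1), schedule, rng)
    # Later stages see the earlier maps, which is what ties the maps together.
    part_cond = np.concatenate([template_feat, contact], axis=1)
    part = ddpm_sample(models["part"], part_cond, (n, 1), schedule, rng)
    dir_cond = np.concatenate([part_cond, part], axis=1)
    direction = ddpm_sample(models["direction"], dir_cond, (n, 3), schedule, rng)
    return contact, part, direction

def zero_eps(x, t, cond):
    """Hypothetical placeholder for a trained noise-prediction network."""
    return np.zeros_like(x)

rng = np.random.default_rng(0)
template_feat = rng.standard_normal((128, 8))  # hypothetical per-point features
models = {"contact": zero_eps, "part": zero_eps, "direction": zero_eps}
contact, part, direction = cascaded_transfer(models, template_feat, rng)
```

With trained networks in place of `zero_eps`, each stage would denoise its map while seeing the previously sampled maps, which is one simple way to encourage consistency among the three outputs.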

Results

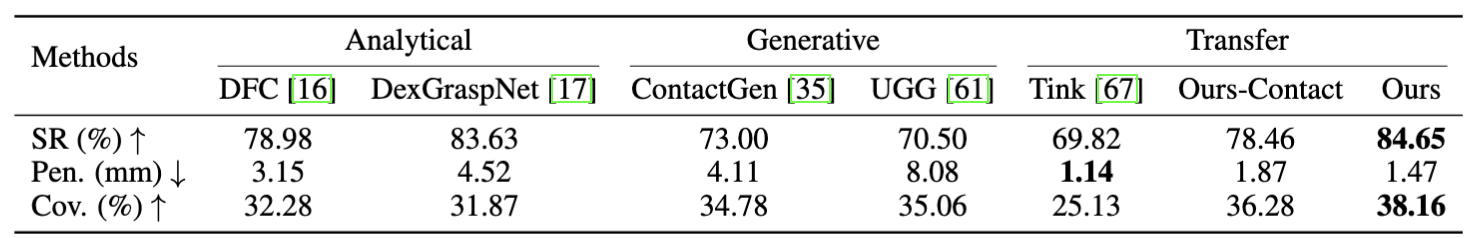

Quality evaluation in task-agnostic grasping.

Both our method and the analytical methods achieve a high grasp success rate across various objects. This indicates that our method can effectively transfer dexterous grasps from a template to diverse novel objects, maintaining high grasp quality even without performing complex force-closure-based optimization for each novel object.

Table 1. Performance comparison with different task-agnostic grasp generation methods. The best results are in bold. Ours-Contact denotes the result with only the transferred contact map, while Ours denotes the result after using the jointly transferred contact, part and direction maps.

Generalization evaluation in task-oriented grasping.

Our method effectively transfers grasps from shape templates to diverse novel objects while maintaining high grasp quality and strong alignment with task specifications. Without requiring retraining on new object categories, our method can be directly applied to novel categories to generate stable dexterous grasps for a wide range of manipulation tasks. These results indicate the superior generalization capability of the proposed method for dexterous grasp generation.

Real world experiments

We conducted real-world dexterous grasping experiments on a self-developed humanoid robotic platform equipped with an Inspire dexterous hand mounted at the end of the robot arm. Our method effectively transfers the contact map from template objects to novel objects under noisy observations and achieves an average success rate of 70% across the tested categories, demonstrating the practical applicability of our approach and its robustness to real-world observation noise.

Citation

Please see our paper for more details of this work. If you find our paper and repo useful, please consider citing:

TODO: Add citation